We finished the project in fine fashion and were pretty happy with the results. It’s a fully interactive experience, with projections and musical notes that change as the hands move through the air. I liked how the Markov chain chose each next note, letting the user improvise how many notes were played over the backing track. I see a lot of potential to take this forward as a performance piece. Below is an example that uses tracking to place the hands on the user.

Final Project Update 2

This week I got the musical interactivity working with the Kinect data. You can now play the instrument by waving your hands in the air, and the notes will always be in key. By tracking the horizontal location of the hands, we map a grid across the space the hands move through and trigger a new note each time a hand enters a new zone of the grid. These triggers are fed into a Markov chain, which chooses the next note so that the performer can focus on phrasing and respond to the notes they hear played back without worrying about playing a “wrong” note.
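To make the grid idea concrete, here is a minimal Python sketch of zone-based triggering. It assumes the hand's horizontal position arrives as a pixel x coordinate in a frame of known width; the names `zone_for` and `ZoneTrigger`, the 640-pixel width, and the 8 zones are illustrative assumptions, not values from the project.

```python
def zone_for(x, width, num_zones):
    """Map a horizontal position x in [0, width) to a zone index 0..num_zones-1."""
    if width <= 0 or num_zones <= 0:
        raise ValueError("width and num_zones must be positive")
    # Clamp so hands slightly outside the frame still land in the edge zones.
    x = min(max(x, 0.0), width - 1e-9)
    return int(x / width * num_zones)


class ZoneTrigger:
    """Report a trigger only when a hand crosses into a different zone.

    The very first reading counts as a crossing, so a hand entering the
    tracking area plays a note immediately.
    """

    def __init__(self, width, num_zones):
        self.width = width
        self.num_zones = num_zones
        self.last_zone = None

    def update(self, x):
        zone = zone_for(x, self.width, self.num_zones)
        crossed = zone != self.last_zone
        self.last_zone = zone
        return crossed


# Hypothetical 640-pixel-wide frame split into 8 zones of 80 pixels each.
trigger = ZoneTrigger(width=640, num_zones=8)
print([trigger.update(x) for x in [10, 40, 90, 100, 170, 60]])
```

Each `True` would become one trigger sent to the Markov chain; small movements inside a zone do not retrigger, which keeps slow hand drift from flooding the instrument with notes.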

ISSUES: At the end of the video, you can see that using both hands overloads the instrument with notes. After some troubleshooting, I suspect this happens because the OSC buffer fills up and then expels its queue of notes. I also have not heard back since reaching out again to my friend at the Clive Davis Institute about using the Leslie speaker, so we are planning contingencies, including virtual instruments and effects.

The Markov chain was an interesting experiment. In past projects, I locked the musical interaction to a scale that only moved linearly up or down as the data increased or decreased. This was fun but got tiresome quickly because the interaction was too predictable. To counter this, I needed a way to skip notes without adding any extra interaction. There are ways to do this mathematically, for example by picking random indexes from a list of the notes in a scale, but that felt too random. I wanted to retain some musicality, which is why I used a Markov chain: its weighted selection of the next note sounds more musical. I used the ml.markov object in MaxMSP.
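The difference between uniform random picks and weighted Markov selection can be sketched in a few lines of Python. This is not the ml.markov object's internals; it is a minimal first-order chain under the assumption that notes are MIDI numbers, and the `TRANSITIONS` weights are hypothetical values chosen for illustration rather than a trained model.

```python
import random

# Hypothetical first-order transition table (illustrative weights only):
# for each current MIDI note, the candidate next notes and relative weights.
TRANSITIONS = {
    60: {62: 3, 64: 2, 67: 1},
    62: {60: 2, 64: 3},
    64: {62: 2, 67: 3, 60: 1},
    67: {64: 3, 60: 1},
}


def next_note(current, transitions, rng=random):
    """Pick the next note with probability proportional to its weight."""
    options = transitions.get(current)
    if not options:
        # Dead end: fall back to any note the model knows about.
        return rng.choice(sorted(transitions))
    notes = list(options)
    weights = [options[n] for n in notes]
    return rng.choices(notes, weights=weights, k=1)[0]


def generate(start, length, transitions, seed=None):
    """Build a melody by calling next_note once per trigger."""
    rng = random.Random(seed)
    melody = [start]
    for _ in range(length - 1):
        melody.append(next_note(melody[-1], transitions, rng))
    return melody


print(generate(60, 8, TRANSITIONS, seed=1))
```

A uniform random index would give every note in the scale the same chance at every step; here the previous note shapes the odds, so common moves recur while unlikely leaps still happen occasionally.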

Below is a sample of the visual element of our project, which my project partner Morgan Mueller did a lot of work on. The scale of the shapes is mapped to the position of the hands, and the particles grow each time the performer triggers a new note.
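The two visual mappings can be sketched as follows: a linear map from hand position to shape scale, and a particle size that jumps on each note and then eases back. This is a Python illustration of the idea, not Morgan's actual patch; the scale range, peak size, and per-frame `decay` factor are hypothetical parameters.

```python
def scale_from_hand(x, width, min_scale=0.5, max_scale=2.0):
    """Linearly map hand x position (0..width) to a shape scale factor."""
    t = min(max(x / width, 0.0), 1.0)
    return min_scale + t * (max_scale - min_scale)


class ParticlePulse:
    """Particle size that jumps on each note trigger and decays back to rest."""

    def __init__(self, rest=1.0, peak=3.0, decay=0.8):
        self.rest = rest
        self.peak = peak
        self.decay = decay  # fraction of the extra size kept each frame
        self.size = rest

    def trigger(self):
        """Call when the performer triggers a new note."""
        self.size = self.peak

    def step(self):
        """Advance one animation frame and return the current size."""
        self.size = self.rest + (self.size - self.rest) * self.decay
        return self.size


pulse = ParticlePulse()
pulse.trigger()
print([round(pulse.step(), 3) for _ in range(4)])
```

An exponential decay like this keeps each pulse readable as a single event, and rapid notes simply re-raise the size before it has fully settled.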

Final Project Update

Team Members: David Azar, Morgan Mueller, & Billy Bennett

We are aiming to build a musical and historical experience that would allow people with little to no musical experience to achieve a high level of musical performance while learning about a fascinating piece of 1950s musical technology that is still widely used today.

Our project will feature the following hardware technologies:

1. Microsoft Kinect

2. Arduino MKR 1010 Wifi

3. Hall Effect sensor or magnet sensor

4. Leslie speaker with pedal

5. Digital projector

Our project will use the following software technologies and algorithms:

1. OpenFrameworks

2. MaxMSP

3. Arduino IDE

4. Ableton Live

5. OSC (Open Sound Control)

6. Markov Chains

7. FFT for Audio Frequency Extraction

This week we focused on getting the following aspects of the project together:

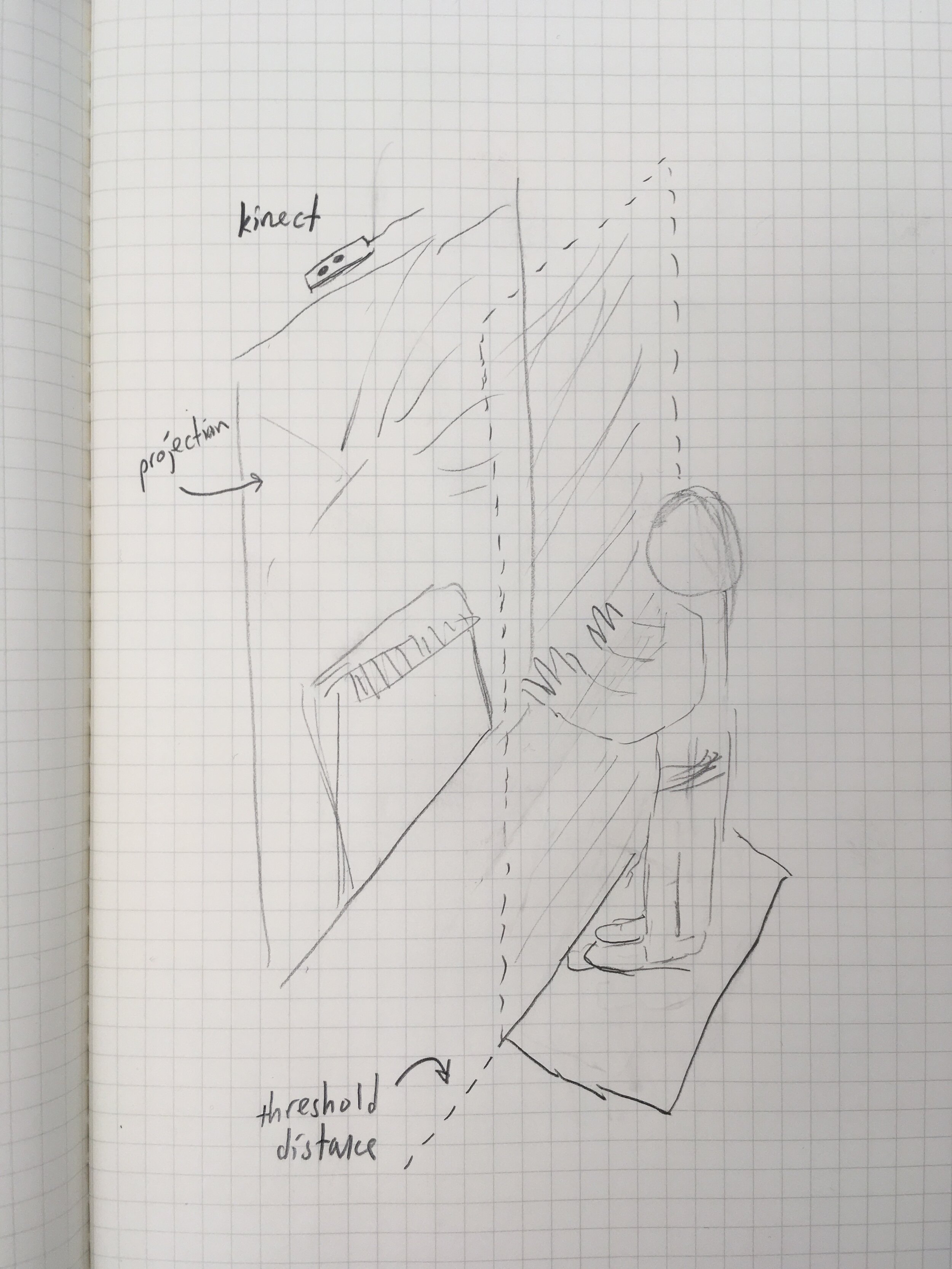

Mapping out a space where users stand, with a threshold beyond which they can extend their hands into the tracking area to trigger the keyboard sounds.
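One way to implement the threshold is with the Kinect's depth reading: a hand only plays when it is closer to the sensor than the front edge of the performance rectangle. The Python sketch below assumes hand depths arrive in millimetres and that a reading of 0 means "no data"; the 1800 mm threshold and the function name `hands_in_play` are hypothetical and would need to be measured and chosen in the actual room.

```python
# Hypothetical threshold plane: distance from the Kinect to the front edge
# of the performance rectangle, in millimetres. Measure this in the room.
THRESHOLD_MM = 1800


def hands_in_play(hand_depths_mm, threshold_mm=THRESHOLD_MM):
    """Return the indices of hands that have reached past the threshold plane.

    A depth of 0 is treated as a missing reading (an assumption about the
    tracking data) and never counts as playing.
    """
    return [i for i, d in enumerate(hand_depths_mm) if 0 < d < threshold_mm]


# Left hand resting by the body, right hand extended toward the sensor.
print(hands_in_play([2100, 1500]))
```

Gating on depth means users can stand and move freely behind the line without triggering notes, and only a deliberate reach forward starts the music.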

Determining the visual presentation of the project based on the musical elements and the physical look of the Leslie speaker. Further considerations include the layout and decor of the room as well as the color palette and geometric properties of the graphics used in the projection.

Considering a method for tracking the rate at which the Leslie speaker rotates, to add a visual element that helps users understand the unique sound the speaker produces.

Making another visual element that triggers each time a note is played to give the user better feedback as to their control over the sound.

For the visual aesthetic, we want something warm that matches the colors of the Hammond and Leslie. We looked at a number of living room designs from the 1970s and found a few that even had an organ as part of the decor.

Here is a drawing of the performance area in which the user will stand to play the instrument.

Below is a rendering of what the final visual interface may look like. We thought it would help the user to have icons for their hands that mirror the blob movement and their actions on the keyboard. The hand icons will hover over a projected Hammond organ keyboard where the keys will be “played”. This gives the user some context for the sounds they are hearing and some familiarity with how to move. Here is a demo of the projection with a Hammond and icons for the hands.

Here is a photo of the rough layout of the room. Users enter from the right and approach the Leslie and performance rectangle.

Once the user enters the room, they can approach the performance area rectangle and begin to play the instrument by waving their hands in the air. The more the user moves their hands, the more notes will be played. To support a meaningful musical performance, the notes must be predetermined to some degree, yet flexible enough to give the user the sense that they are freely playing the instrument. The notes the user plays will be determined by a Markov chain, which will choose each successive note within the key. The Markov transition probabilities will be trained on sequences of notes from the Ethiopian piano song “The Homeless Wanderer” by Tsegue-Maryam Guebrou. This song is especially useful because many of its passages are monophonic, meaning only one note is played at a time, which makes the model easier to train and gives users a simpler model on which to improvise. We will use the sigmund~ object in MaxMSP for note extraction and feed a list of the extracted notes into the ml.markov object.
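The training step can be sketched outside Max to show what the model learns. The Python below is an illustration under stated assumptions, not what sigmund~ or ml.markov do internally: it assumes the pitch tracker yields one frequency estimate per analysis frame (0 when no pitch is detected), rounds each to the nearest MIDI note, merges consecutive frames of the same note, and counts first-order transitions. The example frequencies are made up, not taken from the recording.

```python
import math
from collections import Counter, defaultdict


def freq_to_midi(freq_hz):
    """Convert a frequency to the nearest MIDI note number (A4 = 440 Hz = 69)."""
    return int(round(69 + 12 * math.log2(freq_hz / 440.0)))


def frames_to_notes(freqs_hz):
    """Turn per-frame pitch estimates into a note sequence.

    Frames with no pitch (<= 0) are dropped, and consecutive frames that round
    to the same MIDI note are merged, since a held note spans many frames.
    Note: this also merges deliberate repeated notes, a known simplification.
    """
    notes = []
    for f in freqs_hz:
        if f <= 0:
            continue
        n = freq_to_midi(f)
        if not notes or notes[-1] != n:
            notes.append(n)
    return notes


def train_first_order(notes):
    """Count note-to-note transitions and normalise them into probabilities."""
    counts = defaultdict(Counter)
    for a, b in zip(notes, notes[1:]):
        counts[a][b] += 1
    model = {}
    for a, nexts in counts.items():
        total = sum(nexts.values())
        model[a] = {b: c / total for b, c in nexts.items()}
    return model


# Made-up frame data: C4 held, D4, a silent frame, then C4 again.
notes = frames_to_notes([0, 261.63, 262.0, 293.66, 0, 261.63])
print(notes, train_first_order(notes))
```

Because the source passages are monophonic, each frame has at most one pitch, which is what makes this simple frame-to-note conversion workable.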

The musical inspiration for this project comes from Ethiopian jazz. We will recreate a backing track to the tune “Minlbelesh” by Hailu Mergia & Dahlak Band. It will play underneath the notes the user improvises with the hand movements described above.

Here is a rough sketch of the magnet sensor that will be placed onto the Leslie speaker. Each time the magnet passes the sensor, an Arduino MKR 1010 will send an OSC message over WiFi to the host computer. These messages will trigger a visual response, giving the user more sensory feedback as they hear the Leslie speaker’s effect.
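On the host side, the arrival times of those OSC messages are enough to estimate how fast the rotor is spinning. This Python sketch assumes one magnet, so one pulse per revolution, and timestamps in seconds taken when each message arrives; the class name and the four-pulse window are illustrative choices. Keeping only recent pulses lets the estimate follow the Leslie as it switches between its slow and fast speeds.

```python
from collections import deque


class RotationTracker:
    """Estimate rotor speed (RPM) from magnet-pulse arrival times.

    Assumes one pulse per revolution unless told otherwise, and keeps only
    the most recent pulses so the estimate follows speed changes.
    """

    def __init__(self, window=4, pulses_per_rev=1):
        self.times = deque(maxlen=window)
        self.pulses_per_rev = pulses_per_rev

    def on_pulse(self, t_seconds):
        """Record one pulse (e.g. one incoming OSC message) and return RPM."""
        self.times.append(t_seconds)
        return self.rpm()

    def rpm(self):
        if len(self.times) < 2:
            return None  # need at least one interval
        span = self.times[-1] - self.times[0]
        if span <= 0:
            return None
        pulses_per_s = (len(self.times) - 1) / span
        return pulses_per_s / self.pulses_per_rev * 60.0


tracker = RotationTracker(window=4)
# Two revolutions per second, then the rotor speeds up.
for t in [0.0, 0.5, 1.0, 1.5, 1.75, 2.0, 2.25]:
    print(t, tracker.on_pulse(t))
```

The resulting RPM could drive an animation speed directly, while each individual pulse still fires the per-revolution visual response.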

All of the projections will be controlled in MaxMSP, taking cues from the body movements of the users and the spinning of the Leslie speaker.

Final Project Proposal

Our team would like to take a rare piece of mid-20th-century musical technology and make it accessible to people today, no musical ability required! The Leslie speaker was first developed in 1941 by Donald Leslie for use with the Hammond organ. His design included a spinning pair of speakers or horns to create its signature warbling sound, which was meant to mimic a church or pipe organ.

One fine example of the sound this organ and speaker combination makes is the song “Minlbelesh” by Hailu Mergia and Dahlak Band. Mergia uses this organ sound to great effect, and we would like to mimic his style. But instead of having the user play a keyboard, we would like them to simply move their body and dance to a backing track. This is where the Kinect comes in. By sending blob position data to MaxMSP and Ableton, we would recreate a sound similar to Mergia’s organ and pipe it back out through the Leslie speaker. We are aiming to borrow one of the Leslies from the Clive Davis Institute studios on the 5th and 6th floors. There will also be a visual, projected element that matches the analog, wooden character of the Leslie as shown here.

The musical

For the musical interaction, we will use a method of movement similar to the one in this musical wand that I designed last semester. As the wand moves by set increments, the device outputs musical notes to a software synthesizer. The notes are locked to a predetermined scale. In this project, the movement will come from a person dancing in front of a Kinect.
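The wand's increment-based approach can be sketched as an accumulator: movement adds up, and every time it passes a fixed step size, the note moves one degree up or down the scale. In this Python illustration the step size, the `IncrementPlayer` name, and the scale (C minor pentatonic as MIDI numbers) are hypothetical stand-ins, not the wand's actual settings.

```python
# Example scale for illustration only (C minor pentatonic, as MIDI numbers).
SCALE = [60, 63, 65, 67, 70]


def degree_to_midi(degree, scale=SCALE):
    """Map an unbounded scale degree to MIDI, wrapping into other octaves."""
    octave, index = divmod(degree, len(scale))
    return scale[index] + 12 * octave


class IncrementPlayer:
    """Step through the scale each time accumulated movement passes `step`."""

    def __init__(self, step, start_degree=0):
        self.step = step
        self.degree = start_degree
        self.accum = 0.0

    def move(self, delta):
        """Feed a signed movement delta; return the list of notes triggered."""
        self.accum += delta
        notes = []
        while abs(self.accum) >= self.step:
            direction = 1 if self.accum > 0 else -1
            self.accum -= direction * self.step
            self.degree += direction
            notes.append(degree_to_midi(self.degree))
        return notes


player = IncrementPlayer(step=10)
print(player.move(25), player.move(4), player.move(-30))
```

This is exactly the linear, step-by-step behavior that the Markov chain was later brought in to loosen: every increment moves to an adjacent scale note, so the melody always traces the shape of the gesture.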